This is multimodal AI in action.

For years, AI models operated in silos. Computer vision models processed images. Natural language models handled text. Audio models transcribed speech. Each was powerful alone, but they couldn’t talk to each other. If you wanted to analyze a video, you’d need separate pipelines for visual frames, audio tracks, and any text overlays, then somehow stitch the results together.

What Is Multimodal AI?

Multimodal AI systems process and understand multiple data types simultaneously — text, images, video, audio — and crucially, they understand the relationships between them.

- Text: Natural language, code, structured data

- Images: Photos, diagrams, screenshots, medical imagery

- Video: Sequential visual data with audio and temporal context

- Audio: Speech, environmental sounds, music

- GIFs: Animated sequences (underrated for UI tutorials and reactions)

How Multimodal Systems Actually Work

Think of it like a two-person team: One person describes what they see (“There’s a red Tesla at a modern glass building, overcast sky, three people in business attire heading inside”), while the other interprets the context (“Likely a corporate HQ. The luxury EV and professional setting suggest a high-level business meeting”).

Modern multimodal models work similarly — specialized components handle different inputs, then share information to build unified understanding. The breakthrough isn’t just processing multiple formats; it’s learning the connections between them.

In this guide, we’ll build practical multimodal applications — from video content analyzers to accessibility tools — using current frameworks and APIs. Let’s start with the fundamentals.

How Multimodal AI Works Behind the Scenes

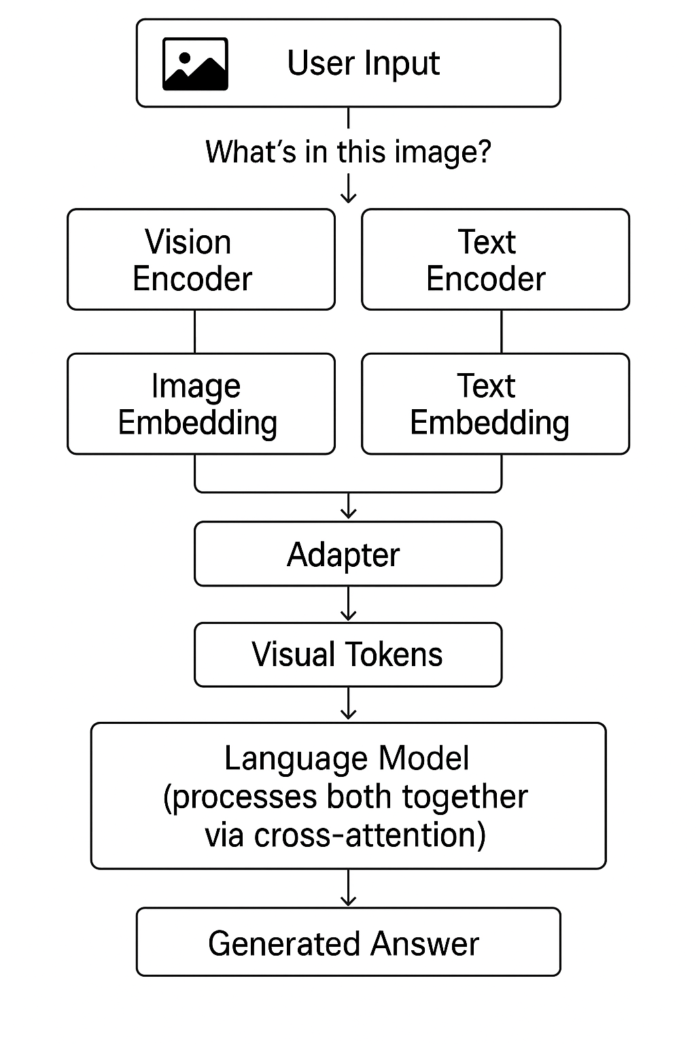

Let’s walk through what actually happens when you upload a photo and ask, “What’s in this image?”

The Three Core Components

1. Encoders: Translating to a Common Language

Think of encoders as translators. Your photo and question arrive in completely different formats: pixels and text. The system can’t compare them directly.

Vision Encoder: Takes your image (a grid of RGB pixels) and converts it into a numerical vector called an embedding. This might look like [0.23, -0.41, 0.89, 0.12, ...] with hundreds or thousands of dimensions.

Text Encoder: Takes your question “What’s in this image?” and converts it into its own embedding vector in the same dimensional space.

The key: These encoders are trained so that related concepts end up close together. A photo of a cat and the word “cat” produce similar embeddings — they’re neighbors in this high-dimensional space.

2. Embeddings: The Universal Format

An embedding is just a list of numbers that captures meaning. But here’s what makes them powerful:

- Similar concepts have similar embeddings (measurable by cosine similarity)

- They preserve relationships (king – man + woman ≈ queen)

- Different modalities can share the same embedding space

When your image and question are both converted to embeddings, the model can finally “see” how they relate.
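The first two properties can be checked with a few lines of NumPy. This is a minimal sketch using hand-made toy vectors; the values and the tiny vocabulary are hypothetical and do not come from any real encoder.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means orthogonal."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Toy embeddings (hypothetical values chosen by hand for illustration).
cat_photo = np.array([0.9, 0.1, 0.0, 0.2])  # pretend output of a vision encoder
cat_word = np.array([0.8, 0.2, 0.1, 0.1])   # pretend output of a text encoder
car_word = np.array([0.0, 0.1, 0.9, 0.3])

sim_cat = cosine_similarity(cat_photo, cat_word)  # related concepts: close to 1
sim_car = cosine_similarity(cat_photo, car_word)  # unrelated concepts: close to 0

# Toy vocabulary with dimensions (royalty, male, female) to illustrate the analogy.
vocab = {
    "king": np.array([1.0, 1.0, 0.0]),
    "queen": np.array([1.0, 0.0, 1.0]),
    "man": np.array([0.0, 1.0, 0.0]),
    "woman": np.array([0.0, 0.0, 1.0]),
}
analogy = vocab["king"] - vocab["man"] + vocab["woman"]
nearest = max(vocab, key=lambda word: cosine_similarity(analogy, vocab[word]))

print(f"cat photo vs 'cat': {sim_cat:.2f}")
print(f"cat photo vs 'car': {sim_car:.2f}")
print(f"king - man + woman is nearest to: {nearest}")
```

Real embeddings are learned rather than hand-written, so the analogy only holds approximately, but the comparison itself is this same cosine calculation.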

3. Adapters: Connecting Specialized Models

Here’s where it gets practical. Many multimodal systems don’t build everything from scratch — they connect existing, powerful models using adapters.

What’s an adapter? A lightweight neural network layer that bridges two pre-trained models. Think of it as a translator between two experts who speak different languages.

Common pattern:

- Pre-trained vision model (like CLIP’s image encoder) → Adapter layer → Pre-trained language model (like GPT)

- The adapter learns to transform image embeddings into a format the language model understands

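This is how systems like LLaVA work: they don’t retrain the language model from scratch. LLaVA connects a CLIP vision encoder to the open Vicuna language model (not GPT) through a small trained projection layer that “teaches” the language model to understand visual inputs.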
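In its simplest form, an adapter can be a single linear projection from the vision encoder's output width to the language model's hidden width. The sketch below assumes that simplest form; `LinearAdapter`, the dimensions, and the random weights are all hypothetical stand-ins, since a real adapter's weights are learned during training.

```python
import numpy as np

VISION_DIM = 768  # hypothetical width of the vision encoder's output
LM_DIM = 1024     # hypothetical hidden size of the language model

class LinearAdapter:
    """A single linear layer mapping vision embeddings into the language model's space."""

    def __init__(self, in_dim: int, out_dim: int, rng: np.random.Generator):
        # Random initial weights stand in for weights that training would learn.
        self.weight = rng.normal(0.0, 0.02, size=(in_dim, out_dim))
        self.bias = np.zeros(out_dim)

    def __call__(self, embeddings: np.ndarray) -> np.ndarray:
        return embeddings @ self.weight + self.bias

rng = np.random.default_rng(0)
# Pretend vision encoder output: one embedding per image patch (196 patches here).
patch_embeddings = rng.normal(size=(196, VISION_DIM))

adapter = LinearAdapter(VISION_DIM, LM_DIM, rng)
visual_tokens = adapter(patch_embeddings)
print(visual_tokens.shape)  # (196, 1024)
```

Only `adapter.weight` and `adapter.bias` would be updated when training the adapter; the two large models on either side can stay frozen.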

Walking Through: Photo + Question

Let’s trace exactly what happens when you ask, “How many people are in this photo?”

Step 1: Image Processing

Your photo → Vision Encoder → Image embedding [768 dimensions]

The vision encoder (often a Vision Transformer or ViT) processes the image in patches, like looking at a grid of tiles, and outputs a rich numerical representation.
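The patch step can be sketched in NumPy. This is a minimal illustration, assuming a 224×224 RGB image and 16×16 patches (common ViT settings, but an assumption here); `image_to_patches` is a hypothetical helper. A real ViT follows this step with a learned linear projection and position embeddings, which are omitted.

```python
import numpy as np

def image_to_patches(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping square patches."""
    height, width, channels = image.shape
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    rows, cols = height // patch_size, width // patch_size
    # Separate the row/column tile indices from the within-tile pixel indices.
    tiles = image.reshape(rows, patch_size, cols, patch_size, channels)
    tiles = tiles.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, channels)
    return tiles.reshape(rows * cols, patch_size * patch_size * channels)

# A random stand-in for a real photo.
image = np.random.default_rng(0).integers(0, 256, size=(224, 224, 3), dtype=np.uint8)
patches = image_to_patches(image, patch_size=16)
print(patches.shape)  # (196, 768): 14 x 14 tiles, each 16 * 16 * 3 raw values
```

Each row is one tile of the grid. The 768 raw values per patch here are a coincidence of the chosen sizes; the encoder's embedding width is set by its learned projection.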

Step 2: Question Processing

"How many people are in this photo?" → Text Encoder → Text embedding [768 dimensions]

Step 3: Adapter Alignment

Image embedding → Adapter layer → "Visual tokens"

The adapter transforms the image embedding into “visual tokens” — fake words that the language model can process as if they were text. You can think of these as the image “speaking” in the language model’s native tongue.

Step 4: Fusion in the Language Model

The language model now receives:

[Visual tokens representing the image] + [Text tokens from your question]

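It processes this combined input with attention, essentially asking: “Which parts of the image are relevant to the question about counting people?” In adapter-style models such as LLaVA, this is the language model’s ordinary self-attention over the concatenated sequence; other designs, such as Flamingo, add dedicated cross-attention layers instead.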
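The "which parts are relevant" step is scaled dot-product attention. Below is a minimal single-head sketch over a concatenated sequence of visual and text tokens. The token values are random and the sizes hypothetical, and the learned query/key/value projections and causal mask of a real language model are left out for brevity.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    shifted = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def attention(queries: np.ndarray, keys: np.ndarray, values: np.ndarray):
    """Scaled dot-product attention; returns the outputs and the attention weights."""
    scores = queries @ keys.T / np.sqrt(keys.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ values, weights

rng = np.random.default_rng(0)
DIM = 64                                   # hypothetical hidden size
visual_tokens = rng.normal(size=(4, DIM))  # stand-ins for adapter outputs
text_tokens = rng.normal(size=(3, DIM))    # stand-ins for embedded question tokens

# The language model sees one sequence: visual tokens first, then the question.
sequence = np.concatenate([visual_tokens, text_tokens], axis=0)
output, weights = attention(sequence, sequence, sequence)

# How strongly the last question token attends to each visual token.
last_token_to_image = weights[-1, : len(visual_tokens)]
print(last_token_to_image)
```

Each row of `weights` sums to 1, so the last row shows how the final question token splits its attention between image and text positions.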

Step 5: Response Generation

Language model → "There are three people in this photo."

Why This Architecture Matters

Modularity: You can swap out components. Better vision model released? Just retrain the adapter.

Efficiency: Training an adapter (maybe 10M parameters) is far cheaper than training a full multimodal model from scratch (billions of parameters).

Leverage existing strengths: GPT-4 is already great at language. CLIP is already great at vision. Adapters let them collaborate without losing their individual expertise.
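The efficiency point can be checked with quick arithmetic. The sketch below counts the parameters of a two-layer adapter and compares them with a language model. The dimensions and the 7-billion-parameter model size are hypothetical round numbers chosen for illustration, not measurements of any specific system.

```python
def linear_params(in_dim: int, out_dim: int) -> int:
    """Parameter count of a fully connected layer: weight matrix plus bias vector."""
    return in_dim * out_dim + out_dim

VISION_DIM = 1024          # hypothetical vision encoder output width
LM_DIM = 4096              # hypothetical language model hidden size
LM_PARAMS = 7_000_000_000  # hypothetical language model size

# A two-layer adapter: vision width -> LM width -> LM width.
adapter_params = linear_params(VISION_DIM, LM_DIM) + linear_params(LM_DIM, LM_DIM)
fraction = adapter_params / LM_PARAMS

print(f"{adapter_params:,} adapter parameters ({fraction:.2%} of the language model)")
# prints: 20,979,712 adapter parameters (0.30% of the language model)
```

Under these assumptions the adapter is on the order of tens of millions of parameters, a fraction of a percent of the model it plugs into.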

Real-World Applications That Actually Matter

Understanding the architecture is one thing. Seeing it solve real problems is another.

Healthcare: Beyond Single-Modality Diagnostics

Medical diagnosis has traditionally relied on specialists examining individual data types: radiologists read X-rays, pathologists analyze tissue samples, and physicians review patient histories. Multimodal AI is changing this paradigm.

Microsoft’s MedImageInsight Premium demonstrates the power of integrated analysis, achieving 7-15% higher diagnostic accuracy across X-rays, MRIs, dermatology, and pathology compared to single-modality approaches. The system doesn’t just look at an X-ray in isolation — it understands how imaging findings relate to patient history, lab results, and clinical notes simultaneously.

Oxford University’s TrustedMDT agents take this further, integrating directly with clinical workflows to summarize patient charts, determine cancer staging, and draft treatment plans. These systems will pilot at Oxford University Hospitals NHS Foundation Trust in early 2026, representing a significant step toward production deployment in critical healthcare environments.

The implications extend beyond accuracy improvements. Multimodal systems can identify patterns that span multiple data types, potentially catching early disease indicators that single-modality analysis would miss.

E-commerce: Understanding Intent Across Modalities

The retail sector is experiencing a fundamental transformation through multimodal AI that understands customer intent expressed through images, text, voice, and behavioral patterns simultaneously.

Consider a customer uploading a photo of a dress they saw at a wedding and asking, “Find me something similar but in blue and under $200.” Traditional search requires precise keywords and filters. Multimodal AI understands the visual style, color transformation request, and budget constraint in a single query.
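One way to picture how such a query could be served is to rank by visual similarity and apply the spoken constraints as filters. The sketch below assumes the color and price limit have already been extracted from the request and that each product has a precomputed style embedding. The catalog, the embedding values, and the `search` helper are all hypothetical.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class Product:
    name: str
    color: str
    price: float
    embedding: np.ndarray  # hypothetical style embedding, hand-made for this example

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def search(query_embedding, catalog, color, max_price, top_k=3):
    """Keep products matching the constraints, then rank them by visual similarity."""
    candidates = [p for p in catalog if p.color == color and p.price < max_price]
    ranked = sorted(candidates, key=lambda p: cosine(query_embedding, p.embedding), reverse=True)
    return ranked[:top_k]

catalog = [
    Product("Blue wrap dress", "blue", 149.0, np.array([0.9, 0.1, 0.2])),
    Product("Blue sequin gown", "blue", 420.0, np.array([0.95, 0.05, 0.1])),  # over budget
    Product("Red wrap dress", "red", 120.0, np.array([0.92, 0.1, 0.15])),     # wrong color
    Product("Blue denim jacket", "blue", 89.0, np.array([0.1, 0.9, 0.3])),    # different style
]

# Pretend embedding of the uploaded wedding photo.
photo_embedding = np.array([0.9, 0.1, 0.1])
results = search(photo_embedding, catalog, color="blue", max_price=200.0)
print([p.name for p in results])  # ['Blue wrap dress', 'Blue denim jacket']
```

An end-to-end multimodal model could fold these steps into a single pass; separating them here just makes the three parts of the request visible.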

Tech executives predict AI assistants will handle up to 20% of e-commerce tasks by the end of 2025, from product recommendations to customer service. Meta’s Llama 4 Scout, with its 10 million token context window, can maintain a sophisticated understanding of customer interactions across multiple touchpoints, remembering preferences and providing genuinely personalized experiences.

Content Moderation: Evaluating Context, Not Just Content

Content moderation has evolved from simple keyword filtering to sophisticated context-aware systems that evaluate whether content violates policies based on the interplay between text, images, and audio.

OpenAI’s omni-moderation-latest model demonstrates this evolution, evaluating images in conjunction with accompanying text to determine if content contains harmful material. The system shows a 42% improvement in multilingual evaluation, with particularly impressive gains in low-resource languages such as Telugu (6.4x) and Bengali (5.6x).

Accessibility: Breaking Down Digital Barriers

Multimodal AI is revolutionizing accessibility by creating systems that can process text, images, audio, and video simultaneously to identify and remediate accessibility issues in real time.

What Actually Goes Wrong at Scale

The technical literature often glosses over practical difficulties that trip up real implementations:

- Data alignment is deceptively difficult. Synchronizing dialogue with facial expressions in video or mapping sensor data to visual information in robotics requires precision, and getting it wrong can fundamentally corrupt your model’s understanding. A 100-millisecond audio-video desynchronization might seem trivial, but it can teach your model that people’s lips move after they speak.

- Computational demands are substantial. Multimodal fine-tuning requires 4-8x more GPU resources than text-only models. Recent benchmarking shows that optimized systems can achieve 30% faster processing through better GPU utilization, but you’re still looking at significant infrastructure investment. Google increased its AI spending from $85 billion to $93 billion in 2025 largely due to multimodal computational requirements.

- Cross-modal bias amplification represents an insidious challenge. When biased inputs interact across modalities, effects compound unpredictably. A dataset with demographic imbalances in images combined with biased language patterns can create systems that appear more intelligent but are actually more discriminatory. The research gap is substantial: Google Scholar returns only 33,400 results for multimodal fairness research, compared with 538,000 for language model fairness.

- Legacy infrastructure struggles. Traditional data stacks excel at SQL queries and batch analytics but struggle with real-time semantic processing across unstructured text, images, and video. Organizations often must rebuild entire data pipelines to support multimodal AI effectively.
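The data alignment problem can be made concrete with a small check. The sketch below estimates the offset between two signals by cross-correlation. It assumes you already have an audio loudness envelope and a mouth-movement signal sampled at the same rate. Both signals here are synthetic, and `estimate_lag` and the 100 Hz rate are hypothetical choices for illustration.

```python
import numpy as np

def estimate_lag(reference: np.ndarray, delayed: np.ndarray) -> int:
    """Return how many samples `delayed` lags behind `reference` (negative if it leads).

    Assumes both signals have the same length and sample rate.
    """
    ref = reference - reference.mean()
    dly = delayed - delayed.mean()
    correlation = np.correlate(dly, ref, mode="full")
    # In "full" mode, index len(ref) - 1 corresponds to zero lag.
    return int(np.argmax(correlation)) - (len(ref) - 1)

SAMPLE_RATE_HZ = 100  # hypothetical: both signals resampled to 100 samples per second

rng = np.random.default_rng(0)
audio_envelope = rng.normal(size=500)  # synthetic stand-in for speech loudness
# Synthetic mouth-movement signal: the same pattern arriving 10 samples late.
mouth_movement = np.concatenate([np.zeros(10), audio_envelope[:-10]])

lag_samples = estimate_lag(audio_envelope, mouth_movement)
lag_ms = lag_samples * 1000 / SAMPLE_RATE_HZ
print(f"Video lags audio by {lag_ms:.0f} ms")  # prints: Video lags audio by 100 ms
```

Running a check like this on a sample of training clips before fine-tuning is one way to catch a systematic offset early. Real audio and video features are noisier than this synthetic pair, so the correlation peak will be less sharp.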

What’s Coming: Trends Worth Watching

- Extended context windows of up to 2 million tokens reduce reliance on retrieval systems, enabling more sophisticated reasoning over large amounts of multimodal content. This changes architectural decisions: instead of chunking content and using vector databases, you can process entire documents, videos, or conversation histories in a single pass.

- Bidirectional streaming enables real-time, two-way communication where both human and AI can speak, listen, and respond simultaneously. Response times have dropped to 0.32 seconds on average for voice interactions, making the experience feel genuinely natural rather than transactional.

- Test-time compute has emerged as a game-changer. Frontier models like OpenAI’s o3 achieve remarkable results by giving models more time to reason during inference rather than simply scaling parameters. This represents a fundamental shift from training-time optimization to inference-time enhancement.

- Privacy-preserving techniques are maturing rapidly. On-device processing and federated learning approaches enable sophisticated multimodal analysis while keeping sensitive data local, addressing the growing concern that multimodal systems create detailed personal profiles by combining multiple data types.

The Strategic Reality

The era of AI systems that truly understand the world, rather than just processing isolated data streams, has arrived. It’s time to build accordingly.